Hi James,

Many thanks for that. It is wonderful. It looks like you have already got the first survey job.

Can I ask how many fields there would be if we want 10-degree spacing between field centres in RA and Dec, with each individual field 12 x 12 deg in size?

I ask this because I am thinking, perhaps incorrectly, that the most likely imaging setups will be either a 135mm lens or a 200mm lens with a full-frame sensor.

A 12 x 12 deg field can easily be achieved with a 2-pane mosaic for a 135mm lens (closer to 15 x 15 deg) and a 4-pane mosaic for a 200mm lens.

With both lenses operated at the same f/ratio, the 200mm will reach the required depth per pane quicker, because of binning to the required spatial resolution. With a 135mm lens we are at (or very close to) the proposed resolution already.

People who use an 85mm-100mm lens can cover at least a 12 x 12 deg field in one pane; the downside is that they will have to expose a bit longer per pane and drizzle to get down to 10 arcsec/pix.

All in all, I think 12 x 12 deg fields at 10 deg centres maximise efficiency across as wide a range of focal lengths as possible. It also means slightly fewer fields.
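
The pane counts and the focal-length trade-off above can be checked with a little geometry. This is only an illustrative sketch under stated assumptions: a 36 x 24 mm full-frame sensor, rectilinear lenses, 10% overlap between panes (our assumption, not a survey rule), all lenses at f/2, and sky-limited data resampled to the same angular pixel scale, so that time per pane scales as 1/aperture².

```python
import math

SENSOR_MM = (36.0, 24.0)   # assumed full-frame sensor, width x height
TARGET_DEG = 12.0          # survey field size along each axis
OVERLAP = 0.10             # assumed fractional overlap between adjacent panes


def fov_deg(focal_mm: float, sensor_mm: float) -> float:
    """Angular extent of one sensor axis for a rectilinear lens."""
    return math.degrees(2 * math.atan(sensor_mm / (2 * focal_mm)))


def panes_per_axis(fov: float) -> int:
    """Smallest n such that n panes overlapping by OVERLAP span TARGET_DEG."""
    if fov >= TARGET_DEG:
        return 1
    # n panes cover fov * (1 + (n - 1) * (1 - OVERLAP)) degrees
    return math.ceil(1 + (TARGET_DEG / fov - 1) / (1 - OVERLAP))


def panes_for_field(focal_mm: float) -> int:
    return panes_per_axis(fov_deg(focal_mm, SENSOR_MM[0])) * panes_per_axis(
        fov_deg(focal_mm, SENSOR_MM[1])
    )


def relative_field_time(focal_mm: float, f_ratio: float = 2.0,
                        ref_aperture_mm: float = 67.5) -> float:
    """Total time for a field, in units of one pane with a 67.5 mm aperture.

    Assumes sky-limited data resampled to a common angular pixel scale, so
    the photon rate per output pixel scales with aperture area."""
    aperture_mm = focal_mm / f_ratio
    return panes_for_field(focal_mm) * (ref_aperture_mm / aperture_mm) ** 2


for f in (85, 135, 200):
    print(f"{f} mm: {panes_for_field(f)} pane(s), "
          f"relative time {relative_field_time(f):.2f}")
```

Under these assumptions the 135mm and 200mm setups need 2 and 4 panes, matching the estimate above, and all three focal lengths end up with total times per field of the same order (roughly 1.8 to 2.5 units).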

What do you reckon? Note that I am trying to establish common terms to describe the various scales: panes (determined by the observing setup), fields (determined by the survey team) and the sky (defined by the Universe).

For the survey to be a success we need to successfully mosaic panes into a field, which I propose is done by the individual observer. At most this is likely to be up to 4 panes, which with existing software tools should not be too challenging.

It is the next step, mosaicing the fields into the sky, which I am not sure about. I started out thinking no, to keep things simpler, but the discussion on this thread has made me realise just how much more useful and impactful the survey would be if we could do it. There are significant challenges here, but in the spirit of collaboration demonstrated above by James, is there anyone willing to volunteer to lead this part of the effort?

Clear skies

Brian

---

Apologies for all the blank messages. I was trying to post the message above, but getting a weird text echo repeatedly.

I finally realised I was using a character or character combination that caused the problem, but I couldn't fully delete the past messages.

This perfectly demonstrates just how out of my depth I am when it comes to computers!

CS Brian

---

No need to apologize. One time I typed a long message and the page lagged; I had to exit the tab and go back or something. The entire thing got automatically deleted.

Another time I made an embarrassing grammar mistake. So we all go through this on AB.

---

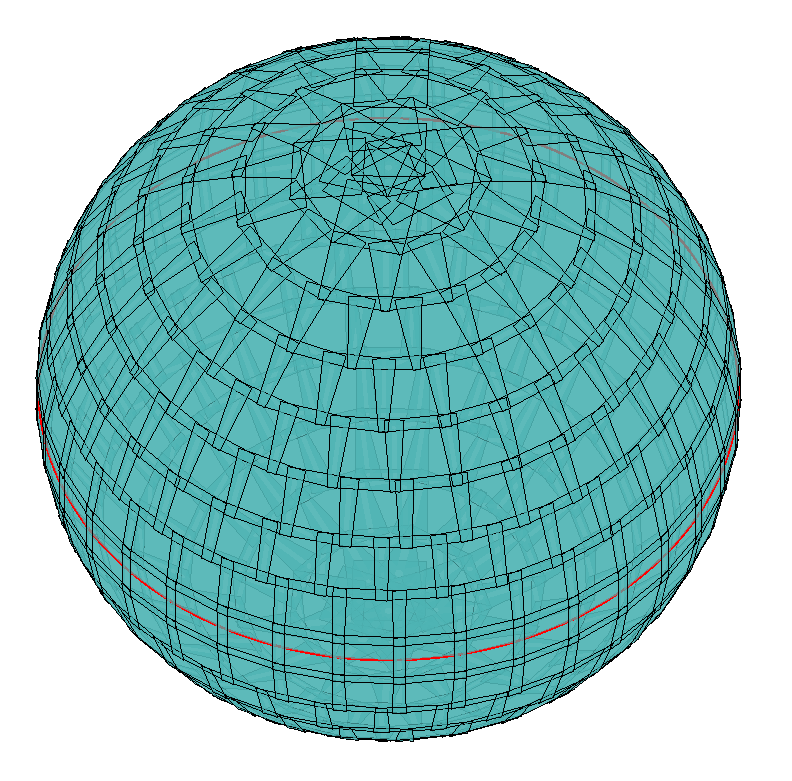

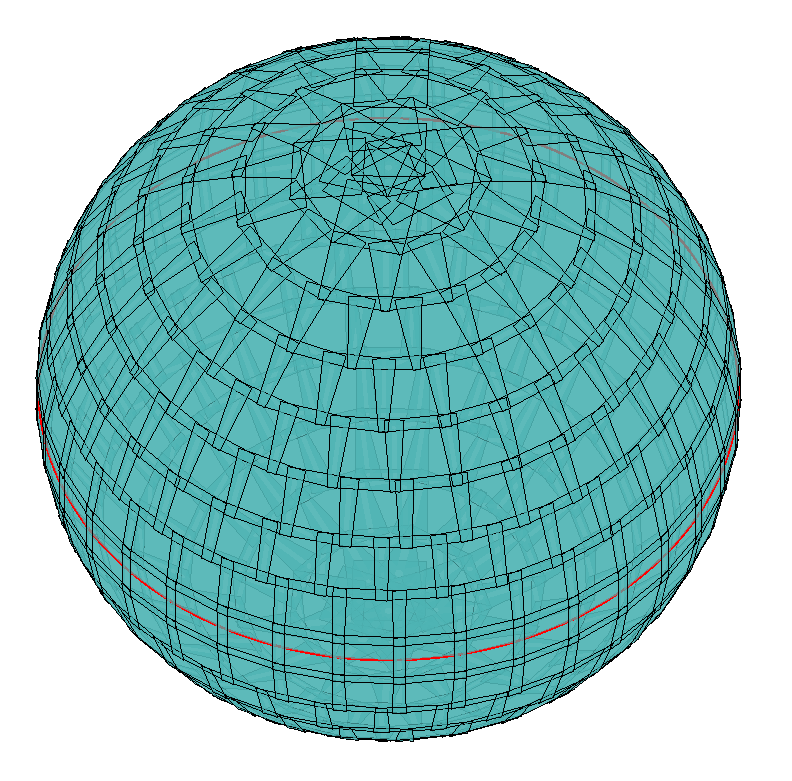

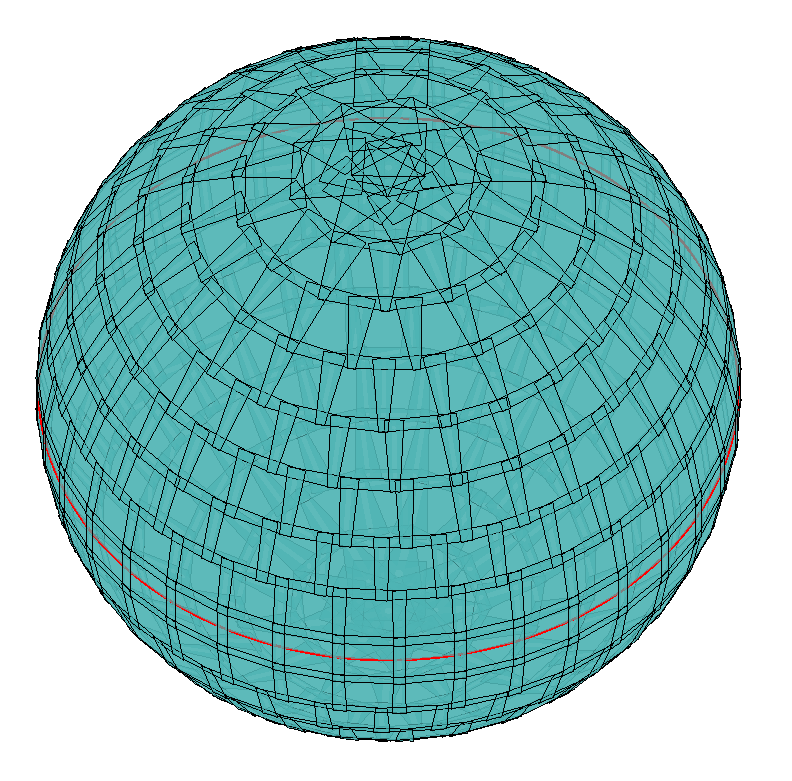

@Brian Boyle No problem. Using 12 x 12 fields with 10 degree centres requires about 430-450 fields, depending on exactly how you interpret the 20% overlap requirement as you get close to the poles. As you move to higher and higher N/S declinations, neighbouring fields overlap at increasingly large angles. So you then have the decision of whether fields should overlap by 20% on average, or by at least 20% everywhere.

There's also the option of moving away from a strict RA/DEC alignment of fields close to the poles and thereby removing the singularities that occur at these points. This could save 10-20 fields by my estimation, but at the cost of introducing more complexity. Probably not worth it I reckon.

Anyhow, here's one option with a reasonably generous interpretation of the 20% overlap requirement that needs 450 fields (versus a theoretical minimum of 413). I avoided putting fields exactly at the poles as in my experience achieving both RA/DEC and rotational alignment here is really hard.
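
The "theoretical minimum" quoted above is simply the area of the sky divided by the 10 x 10 deg that each field centre is responsible for. The sketch below reproduces that figure and also counts fields for one simple, hypothetical ring layout (Dec bands centred at ±5, ±15, ..., ±85 deg, which is our assumption and not necessarily the layout in the images above).

```python
import math

# Full sky in square degrees: 4*pi steradians * (180/pi)^2
FULL_SKY_SQ_DEG = 4 * math.pi * (180 / math.pi) ** 2  # ~41253


def theoretical_minimum(spacing_deg: float = 10.0) -> int:
    """Lower bound: each field 'owns' at most spacing x spacing square degrees."""
    return math.ceil(FULL_SKY_SQ_DEG / spacing_deg ** 2)


def ring_layout(spacing_deg: float = 10.0) -> dict:
    """Hypothetical layout: Dec bands centred at +/-5, +/-15, ..., +/-85 deg.

    Each band gets enough fields that the RA spacing, measured along its
    equatorward edge (the widest small circle in the band), is at most
    spacing_deg. Projection effects within a field are ignored."""
    counts = {}
    n_bands = round(90 / spacing_deg)
    for k in range(n_bands):
        dec_centre = spacing_deg * (k + 0.5)
        edge_rad = math.radians(spacing_deg * k)
        # small epsilon guards against cos(60 deg) evaluating to 0.5000000000000001
        n_fields = math.ceil(360 * math.cos(edge_rad) / spacing_deg - 1e-9)
        counts[dec_centre] = n_fields
        counts[-dec_centre] = n_fields
    return counts


print(theoretical_minimum())          # 413
print(sum(ring_layout().values()))    # 456
```

This naive layout needs 456 fields, close to the 450 quoted above; a real layout would also account for field geometry near the poles and for whichever overlap rule is chosen.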

---

As of writing, we now have over 40 people signalling interest in taking images for the survey and a similar number interested in the survey more generally.

Before we move to a separate thread I thought I might lay out a high-level strawman for the survey, to get specific feedback and allow others to back out/come in, if the survey is not going in the right direction for them.

Name: Astrobin Community Survey

Principles: Quality, Uniformity, Inclusivity

Field centre-to-centre spacing: 10 degrees

Field size: 12 degrees x 12 degrees

Pixel scale: 10 arcsec/pixel

Field depth: SNR ~30 per pixel at 22.5 mag/arcsec²

Field band: RGB/OSC

Processing: PixInsight pipeline: WBPP (with LN) + ABE (1st-order fit) + SPCC [mosaicing of individual panes up to field size in APP or PI?]

Motivation for survey parameters

Field Size and Pixel Scale

Permits a wide range of focal lengths, from 85mm to 200mm, with lesser or greater amounts of drizzling/mosaicing to achieve the desired pixel scale.
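
How much binning or drizzling each lens needs follows from the standard pixel-scale formula, scale = 206265 × pixel size / focal length. A minimal sketch; the 6.0 µm pixel size is a hypothetical value for a full-frame camera, not a survey requirement.

```python
ARCSEC_PER_RADIAN = 206264.806
TARGET_SCALE = 10.0  # survey pixel scale, arcsec/pixel


def pixel_scale_arcsec(pixel_um: float, focal_mm: float) -> float:
    """Native pixel scale in arcsec/pixel (small-angle approximation)."""
    return ARCSEC_PER_RADIAN * (pixel_um * 1e-3) / focal_mm


def resample_factor(native_scale: float) -> float:
    """>1: bin/downsample by this factor; <1: drizzle/upsample by 1/factor."""
    return TARGET_SCALE / native_scale


PIXEL_UM = 6.0  # hypothetical pixel size for a full-frame camera
for f in (85, 135, 200):
    s = pixel_scale_arcsec(PIXEL_UM, f)
    print(f"{f} mm: {s:.1f} arcsec/px, resample factor {resample_factor(s):.2f}")
```

With pixels of this size the 135mm lens lands close to 10 arcsec/pixel, the 200mm needs mild binning and the 85mm needs drizzling, consistent with the reasoning earlier in the thread.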

Field Depth

Based on my SNR calculation [which could be wrong!], this requires 2 hours at a Bortle 2 site (21.8 mag/arcsec²), 3 hours at Bortle 3 (21.4) and 4 hours at Bortle 4 for a 67.5mm aperture [135mm lens operated at f/2]. For this I assumed 1000 photons from Vega at the top of the atmosphere, a mean observing zenith distance of 45 deg and a mean throughput of the optical system of 0.15 [0.25 for the Bayer matrix, and 0.6 for everything else: sensor, optics etc.]. This is pessimistic, but I think one needs to be for a survey. A big issue here is the use of non-astro-modded DSLRs: for these, reaching the required depth in Hα will take much longer. Do we permit them?
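
The depth estimate above can be reproduced in outline with the usual sky-limited signal-to-noise formula. This is a sketch, not the actual calculation behind the quoted figures: reading "1000 photons from Vega" as 1000 photons s⁻¹ cm⁻² Å⁻¹, the 1000 Å effective bandwidth and the 0.2 mag/airmass extinction are our assumptions; the 67.5 mm aperture, 0.15 throughput, 45 deg zenith distance, 10 arcsec pixels and SNR 30 at 22.5 mag/arcsec² come from the text. Read noise and dark current are ignored.

```python
import math

VEGA_PHOTONS = 1000.0  # assumed: photons s^-1 cm^-2 A^-1 from a 0-mag star
BANDWIDTH_A = 1000.0   # assumed effective bandwidth, Angstrom
EXTINCTION = 0.2       # assumed extinction coefficient, mag per airmass


def photon_rate(mag: float, aperture_cm: float, throughput: float) -> float:
    """Detected photons per second from a source of the given magnitude."""
    area_cm2 = math.pi * (aperture_cm / 2) ** 2
    return VEGA_PHOTONS * 10 ** (-0.4 * mag) * BANDWIDTH_A * area_cm2 * throughput


def hours_to_snr(target_sb: float, sky_sb: float, snr: float = 30.0,
                 aperture_cm: float = 6.75, throughput: float = 0.15,
                 pixel_arcsec: float = 10.0, zenith_deg: float = 45.0) -> float:
    """Sky-limited exposure time (hours) to reach `snr` per pixel.

    SNR = S*t / sqrt((S + B)*t), so t = SNR^2 * (S + B) / S^2."""
    per_pixel = 2.5 * math.log10(pixel_arcsec ** 2)  # mag/arcsec^2 -> mag/pixel
    airmass = 1 / math.cos(math.radians(zenith_deg))
    # target is dimmed by extinction; sky brightness is already measured at the ground
    s = photon_rate(target_sb - per_pixel + EXTINCTION * airmass, aperture_cm, throughput)
    b = photon_rate(sky_sb - per_pixel, aperture_cm, throughput)
    return snr ** 2 * (s + b) / s ** 2 / 3600


for sky in (21.8, 21.4):
    print(sky, round(hours_to_snr(22.5, sky), 1))
```

With these assumptions it gives roughly 2.1 h for a 21.8 mag/arcsec² sky and 2.8 h for 21.4 mag/arcsec², in line with the estimates above; changing the assumed bandwidth or extinction moves the numbers accordingly.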

Processing

Despite passionate advocates for other software suites, I think PI is the only way to go here. The new WBPP with local normalisation is really very good, and it would help, I think, if imagers also took the extra step of ABE and SPCC. This may be needed in any case to produce the successful mosaic required for a 12 x 12 deg field. Ideally I think we should keep to one software package, but my experience of PI mosaicing to date has been very poor. Can someone provide advice here?

In addition to this we need a process for allocating field centres and monitoring progress.

Field Allocation

My initial thought was that allocation should be random, but now I think people should just pick their field centres as they are ready to do them, and as they are released by the survey field manager. Perhaps we simply provide a list every lunation of fields within the relevant RA/LST range, and people book out field centres as they are ready to attempt them. Once a field is booked out, it would be expected to be completed (i.e. observed, processed and quality-controlled as acceptable to the survey) within four (?) weeks. Otherwise the field centre is returned to the pool for "booking out" again.

This way we avoid a build-up of unobserved but booked-out fields.
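
The book-out-and-expire scheme could easily live in a spreadsheet, but its rules can be pinned down in a few lines. A minimal sketch, assuming integer field ids (for example from a field-centre list) and the four-week expiry floated above; the class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

BOOKING_PERIOD = timedelta(weeks=4)  # the "four (?) weeks" proposed above


@dataclass
class FieldPool:
    """Hypothetical tracker for booking out survey fields."""
    available: set = field(default_factory=set)
    booked: dict = field(default_factory=dict)   # field_id -> (observer, booked_on)
    completed: set = field(default_factory=set)

    def book(self, field_id: int, observer: str, today: date) -> None:
        if field_id not in self.available:
            raise ValueError(f"field {field_id} is not available")
        self.available.remove(field_id)
        self.booked[field_id] = (observer, today)

    def complete(self, field_id: int) -> None:
        """Call once the field has been observed, processed and passed QC."""
        self.booked.pop(field_id)
        self.completed.add(field_id)

    def release_expired(self, today: date) -> list:
        """Return overdue bookings to the pool and report which were released."""
        expired = sorted(f for f, (_, booked_on) in self.booked.items()
                         if today - booked_on > BOOKING_PERIOD)
        for f in expired:
            del self.booked[f]
            self.available.add(f)
        return expired


def fields_in_ra_window(centres: dict, ra_start: float, ra_end: float) -> list:
    """Field ids whose centre RA (deg) lies in [ra_start, ra_end], wrapping through 0h."""
    if ra_start <= ra_end:
        return sorted(f for f, (ra, _dec) in centres.items() if ra_start <= ra <= ra_end)
    return sorted(f for f, (ra, _dec) in centres.items() if ra >= ra_start or ra <= ra_end)
```

Each lunation, `fields_in_ra_window` would produce the list of fields on offer, and running `release_expired` regularly keeps stale bookings from piling up.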

But it also implies close coordination between the Field Distribution Manager and the Quality Control Manager. It also places a fairly heavy burden on the QC Manager (and their team). Irrespective of whether we intend to make an all-sky mosaic or not, the QC Manager is an important role, but it is all the more crucial if we are to attempt an all-sky mosaic. The QC Manager and the Curation Manager will need to work closely together too.

As you will see, the strawman survey design and implementation also presuppose a structure:

Field Distribution Manager

Quality Control Manager

Curation Manager

possibly each with teams.

No doubt I will have forgotten something, but I think that is quite enough for now!

Is it too crazy to think we could start next lunation? It is mid-summer for most, I understand, but there are still some hours available at latitudes <40 degrees, and it is mid-winter for us at the top of the world [depending on your perspective, of course].

CS Brian

---

Hi Brian

I agree the new WBPP with local normalisation is great.

Now, I'm not sure if this is what you were suggesting, so apologies if I'm doubling up on the same info here, but if I can make a small suggestion that may make gradients easier to deal with: apply a 1st-order ABE to every single frame before stacking, using an ImageContainer in PI.

As you shoot, gradients constantly change throughout the night. In my experience this helps tremendously in keeping a more uniform background and avoiding complex gradients in the final stacked image.

Steeve
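
A 1st-order background model is essentially a plane, a + b·x + c·y, fitted to the sky background. The numpy sketch below illustrates that idea on a single frame; it is not PixInsight's ABE implementation. The background is sampled as the median of square tiles, which is fairly robust to stars, and the plane is found by linear least squares. As the replies below point out, a fit on a single noisy frame cannot tell a gradient from faint, large-scale nebulosity.

```python
import numpy as np


def fit_plane_background(img: np.ndarray, box: int = 32) -> np.ndarray:
    """Fit a + b*x + c*y to tile medians and evaluate it over the whole image."""
    ny, nx = img.shape
    xs, ys, vals = [], [], []
    for y0 in range(0, ny - box + 1, box):
        for x0 in range(0, nx - box + 1, box):
            tile = img[y0:y0 + box, x0:x0 + box]
            xs.append(x0 + (box - 1) / 2)   # tile centre in pixel coordinates
            ys.append(y0 + (box - 1) / 2)
            vals.append(np.median(tile))    # median resists stars and hot pixels
    xs, ys, vals = np.asarray(xs), np.asarray(ys), np.asarray(vals)
    design = np.column_stack([np.ones_like(xs), xs, ys])
    (a, b, c), *_ = np.linalg.lstsq(design, vals, rcond=None)
    yy, xx = np.mgrid[0:ny, 0:nx]
    return a + b * xx + c * yy


def subtract_plane(img: np.ndarray, box: int = 32) -> np.ndarray:
    """Remove the fitted plane but keep the mean background level as a pedestal."""
    bg = fit_plane_background(img, box)
    return img - bg + bg.mean()
```

Running `subtract_plane` over every calibrated frame mirrors, in spirit, the ImageContainer approach described here.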

---

James,

That looks perfect. Can you post the list of field centres for this? I might have a crack at creating a field over the next few nights, in the few hours while the Moon is still down.

CS Brian

---

Hi Steeve,

Hah! I had never thought of doing that.

I just apply ABE to the final integrated image as it comes out of WBPP. I have never really had a problem with gradients, but that might be either because I have never noticed gradients at a level that would cause problems for a mega-mosaic, or because I have a really dark sky (Bortle 2).

Is there some way in WBPP that you can include such a low order gradient removal? Otherwise it might make the pre-processing a little more complex.

How do you incorporate it into your pipeline?

CS Brian

---

Unfortunately, that is something WBPP can't do. I'm only suggesting this because one common occurrence could be the Moon's angle changing throughout the night, which may create varying gradients unless you are shooting on moonless nights all the time. This may also help images taken from Bortle 3+ skies.

What you have to do is first open all the frames you want to stack in an ImageContainer in PI, set it to output to a different folder of your choice, and then drag and drop a 1st-order ABE onto the container. You will end up with all frames having a 1st-order ABE applied and written to that folder. Then load all these corrected frames into WBPP and stack as normal. On your final stacked image you can then apply another 1st-order ABE and SPCC, and you should have something really solid.

It doesn't take that much longer, but when you are combining data coming from so many different parts of the globe this may make a difference.

---

Doing ABE prior to an integration actually offers no benefit.

Local Normalization adapts the gradients of the individual light frames, which simplifies the gradient in the integrated image and offers better rejection.

Applying ABE to a single frame prior to an integration may be able to remove a gradient from all the images, but it is quite impossible to tell whether it is an unwanted light pollution gradient or a faint nebula or whatever at this stage of the processing. So it can actually harm the final image due to a lack of sufficient data at this stage of processing.

Currently, the ideal workflow is to run WBPP including LN and then remove the gradients after integration. This gives you the best opportunity to judge the effectiveness of the gradient reduction.

CS Gerrit

---

Great discussion. Can I suggest that we leave this to the observer, and only insist on ABE pre-stack if it turns out that there are residual gradients with ABE post-stack? If we are quick on QC, and we have already been alerted to it as a possibility, we should pick this up quickly, necessitating minimal retakes.

I also hope that the field selection might help individuals to pick the best-placed fields, either with respect to the Moon being down or distance from any light domes, to minimize the potential for variable gradients.

CS Brian

---

Fair point, that makes sense to me, even though I have had good success doing it this way on mosaics in the past without any issues. I know @Jean-Baptiste Auroux is using ABE this way for his preprocessing as well, even though I don't think he uses WBPP; from what I can gather, he does everything manually.

---

@Brian Boyle CSV file with field centres attached. They are ordered by increasing DEC then increasing RA, thereby putting the southern hemisphere in its rightful place at the top of the list

field_centres.csv

---

Steeve, thanks for understanding. And just think: if this empirical approach doesn't work, think of the bragging rights you will have.

CS Brian

---

Love your work, James.

CS Brian

---

Haha! No worries mate, no ego here. Happy to participate in the discussion with an open mind.

---

Personally, I'm not using WBPP (for a host of reasons), and ABE isn't really useful if you have serious gradients (and don't think they won't be there shooting in Bortle 1 skies; they will). I also cannot offer a full-frame camera (APS-C size is fine) together with dark skies, and those are only in the southern hemisphere.

---

I could offer to write a first draft of a preprocessing and linear post-processing pipeline, including the requirements it places on image acquisition.

Would that be helpful to others for planning, or is it too early to decide on this yet?

@Steeve Body If one works without WBPP, it is possible to create a small integration as an LN reference (probably 10 to 20% of your frames, for improved SNR), apply ABE to that integration, and then use it as the reference for LN of the whole stack. This has the advantage of starting from a higher SNR, which at least makes it easier to determine what is signal and what is not, and it will remove some gradients from the other frames during preprocessing.

CS Gerrit
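
The point that a high-SNR, gradient-corrected reference does the heavy lifting can be illustrated with a deliberately simplified, global stand-in for local normalisation; PixInsight's LocalNormalization works locally and also matches scale, so this is not its algorithm. Fitting a smooth surface to the difference between a frame and the reference is safer than fitting the frame alone, because real sky structure such as nebulosity is common to both images and cancels in the difference, leaving mostly gradient and noise.

```python
import numpy as np


def normalise_to_reference(frame: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Remove a planar offset so that `frame` matches `reference` in background.

    A global, first-order simplification of the idea behind local
    normalisation: sky signal common to both images cancels in the difference."""
    ny, nx = frame.shape
    yy, xx = np.mgrid[0:ny, 0:nx]
    diff = (frame - reference).ravel()
    design = np.column_stack([np.ones(diff.size), xx.ravel(), yy.ravel()])
    coeffs, *_ = np.linalg.lstsq(design, diff, rcond=None)
    offset = (design @ coeffs).reshape(ny, nx)
    return frame - offset
```

In practice the reference would be the small, ABE-corrected integration described above, and stars that differ between frames (seeing, tracking) would call for a more robust fit than plain least squares.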

---

Responding particularly to @Brian Boyle 's workflow document above:

- In discussion with Brian I'm putting together a Google Sheet that would allow people to see which fields still need doing, register their interest and see when they've been allocated a field to image. More soon!

- Great discussion around the workflow for stacking, background removal etc. I think standardising on the tools and scripts is going to be essential, as well as a minimum requirement on image collection (flats, darks, integration time etc.)

- I would advocate for NOT asking people to mosaic the images that make up their field themselves. Depending on which tools people use (and there seems to be a lot of options here!) I think we're going to end up with quite variable results. I have another proposal:

- People upload the individual images that make up their field to AB: fully stacked and background-corrected, but still linear. They give them a standard title, e.g. SURVEY_FIELD-XXX_Y for image 'Y' of field 'XXX'.

- AB performs plate solving as usual via PI

- (Big ask of @Salvatore Iovene !) Could we add a menu option that allows a user to resample their image onto a standard RA/DEC aligned 10" grid? I think all the required information is present at this stage: the plate solved solution provides the RA/DEC value at each (x,y) pixel coordinate, so a reverse 2D interpolation transforms the original image onto a regular grid. Happy to give a code example for this if needed.

- (Assuming the last step is feasible) The user then sends a link to the corrected image (presumably showing up as a revision of their original) to the project QC manager

- We now have the question of colour calibration to ensure consistency between panels. My proposal here:

- Ask each contributing user to take an image using the same setup of a calibration region of the sky. I suggest we could nominate 4 such regions equally spaced in RA and close to the equator (so visible from N and S hemispheres). Ideally the regions would be chosen to include some bright features such as reflection/H/O nebulosity and a good star field.

- Users upload their calibration images and register them as described above.

- We provide a 'master' image of each calibration field taken using a canonical set of equipment (to be determined)

- As the user's registered calibration image and the master registered calibration image should be aligned at the pixel level, we can now adjust the colour balance and colour mixing of the user's calibration image to match that of the master image. Essentially this would involve determining the coefficients in the 3 x 3 matrix M such that RGB_master = M * RGB_user. This is a straightforward linear algebra problem with a few tweaks (e.g. removing clipped pixels). It would be straightforward to code up in Python, Matlab, R etc. I don't know whether PI provides similar functionality.

- Armed with the coefficients of matrix M for a given user's setup, the user's field images can now be easily scaled to give colour and brightness consistent with the canonical equipment setup.

- One important caveat: H-alpha and S3 contributions would be hard to fix, given that OSC or RGB(L) provides only a single measurement of 'red' light, while continuum red starlight and Ha/S3 emission could see very different equipment sensitivities. I can't think of a good solution, short of either excluding unmodded OSC cameras completely or restricting them to fields that don't have significant Ha/S3 nebulosity.

- At this point, I'm not sure of what framework to use for the colour calibration process. If it could all be done within a script in PI, then we could push this back onto users. If not, then maybe the QC manager would need to tackle this with some custom code.

- Finally (!) the registered and colour-calibrated images can now go to the curation manager to drop into the master image. This is going to be large - 5-8 billion pixels with 6-12 bytes per pixel depending on whether we go with 16 bit or 32 bit values. So potentially up to 100 GB. Again, a simple custom script is going to be needed to drop the images into the correct location in the master.
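
The reverse-interpolation resampling step in the proposal can be sketched in a few lines: build the regular output grid in RA/Dec, ask the plate solution for the source pixel coordinates of every output point, and interpolate the source image there. In the sketch, `world_to_pixel` is a hypothetical callable standing in for the plate solution (with astropy, for instance, `WCS.all_world2pix` could play that role); the grid is regular in RA and Dec, interpolation is bilinear, and RA wrap-around at 0h is not handled.

```python
import numpy as np


def bilinear_sample(img, x, y):
    """Sample img at fractional pixel coords (x = column, y = row); NaN outside."""
    ny, nx = img.shape
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    inside = (x0 >= 0) & (y0 >= 0) & (x0 < nx - 1) & (y0 < ny - 1)
    # clip so indexing is always valid; outside points are masked at the end
    x0c = np.clip(x0, 0, nx - 2)
    y0c = np.clip(y0, 0, ny - 2)
    fx, fy = x - x0c, y - y0c
    value = (img[y0c, x0c] * (1 - fx) * (1 - fy)
             + img[y0c, x0c + 1] * fx * (1 - fy)
             + img[y0c + 1, x0c] * (1 - fx) * fy
             + img[y0c + 1, x0c + 1] * fx * fy)
    return np.where(inside, value, np.nan)


def resample_to_grid(img, world_to_pixel, ra0, dec0, n_ra, n_dec, step_deg=10 / 3600):
    """Resample img onto a grid regular in RA and Dec with step_deg spacing.

    world_to_pixel(ra, dec) -> (x, y) is a hypothetical stand-in for the
    plate solution; output[j, i] corresponds to (ra0 + i*step, dec0 + j*step)."""
    ra = ra0 + step_deg * np.arange(n_ra)
    dec = dec0 + step_deg * np.arange(n_dec)
    ra_grid, dec_grid = np.meshgrid(ra, dec)
    x, y = world_to_pixel(ra_grid, dec_grid)
    return bilinear_sample(img, np.asarray(x, float), np.asarray(y, float))
```

Output pixels that fall outside the source image come back as NaN, which makes it easy to see each image's footprint when dropping it into a larger mosaic.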

Sorry for the long post! Thoughts appreciated.
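
The colour-matching step in the proposal (finding M such that RGB_master = M * RGB_user) is an ordinary linear least-squares problem. A minimal numpy sketch, assuming both calibration images are linear, background-subtracted, registered pixel for pixel and scaled to 0-1; the clip level of 0.98 is an arbitrary choice for illustration. It fits only a multiplicative 3 x 3 matrix, so any additive colour offset would need handling separately.

```python
import numpy as np


def fit_colour_matrix(rgb_user, rgb_master, clip_level=0.98):
    """Least-squares 3x3 matrix M such that rgb_master ~ M @ rgb_user per pixel.

    Both inputs have shape (height, width, 3). Pixels that are near saturation
    in either image are excluded, since clipped values break linearity."""
    user = rgb_user.reshape(-1, 3)
    master = rgb_master.reshape(-1, 3)
    usable = (user.max(axis=1) < clip_level) & (master.max(axis=1) < clip_level)
    # Row form: master_row = user_row @ M.T, solved for all three columns at once
    m_transposed, *_ = np.linalg.lstsq(user[usable], master[usable], rcond=None)
    return m_transposed.T


def apply_colour_matrix(rgb, m):
    """Apply M to every pixel of a (height, width, 3) image."""
    return rgb @ m.T
```

Once M is known for a contributor's setup, `apply_colour_matrix` can be run on each of their field images before they go to the curation step.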

---

Christian Bennich:

A thought here ☝️

@astrobiscuit is running the BAT (Big Amateur Telescope https://www.astrobiscuit.com/big-amateur-telescope); maybe he/they have some experience to share about data collection, integration etc. 🤷‍♂️

@Big_Amateur_Telescope

Yes, but we have repeatedly turned down suggestions of mosaics or surveys due to the insane amount of organisational demand.

1st, you're going to need people who are interested in shooting patches of sky where there is nothing there.

2nd, you're going to need good optics. From working with BAT data, I can tell you that this is far from the norm: of the data uploaded to BAT, even in a good batch, probably only 50% is usable. That cuts down the number of people who can take part.

3rd, light pollution is a real issue. If you split the sky into patches, some patches will not be very deep. IMO this project can only be done with very strict rules, e.g. no moon, Bortle 3 and below, a specified exposure time, accounting for different f-ratios, etc.

A whole-sky survey is crazy; a constellation, though, is more doable if you find enough people for it.

(I also currently don't have access to the BAT account, so this is about as official an answer as you will get from us xd)

---

Brian Boyle:

That's really interesting: this proposal is sort of an inverse BAT.

Is Astrobiscuit on AstroBin?

No, the account is run by me for Astrobiscuit. The other one is indeed his account, but I'm pretty sure it's no longer in use.

---

Hi guys,

I just finished the first draft of the preprocessing and linear post-processing guide, to offer some clarification in this regard. See this link: https://docs.google.com/document/d/1_IdBbbtzMk3pUuYUPi0JJWMvjsvRtPmj/edit?usp=sharing&ouid=102793495713995642568&rtpof=true&sd=true

This is just a first draft, nothing is final, and everything is open for debate. Feel free to add comments if you think something is inadequate or should be changed for whatever reason.

The requirements for image acquisition were derived from the requirements of different algorithms used in the preprocessing to work properly.

@James Tickner I would opt for SPCC as the color calibration tool of choice. It has a huge database of stars all over the sky, so there are enough of them in every possible field. Stellar photometry offers more accurate measurements of color, and it is more adaptable to different equipment. Also, it can handle multiplicative and additive color shifts just fine, so it covers every aspect of color calibration.

Edit: I removed the document and added a Google Drive link for better collaboration capabilities.

CS Gerrit

---

I feel most of the "requirements" are either the defaults or are questionable, especially the SPCC requirements. SPCC calibration stars are quite sparse at high galactic latitudes, and I can hardly see the point of using it in preference to PCC, especially if using OSC.

---

You are definitely right about the requirements part. Most of them are standard, but since we will be working with a lot of different people with different experiences and different equipment, I also included some basic stuff like darks in the guide. Just for the sake of uniformity and clarity.

The whole SPCC vs PCC debate will probably be a little too much if we want to keep this thread focused on the topic. Most of my other ideas were aimed at the same goals: uniformity and accuracy over a huge range of possible equipment, locations and conditions.

As I already mentioned at the beginning, this is just a draft. Everything can be changed if people feel it is unnecessary or inaccurate. Just comment on it.

CS Gerrit
