David Huff:

andrea tasselli:

So after all is said and done your integrated seeing is just 2.7". Which isn't bad at all but certainly not exceptional which would prompt the other question: was it worth it when you can get that sort of result with just "normal" long integrations?

This is fast imaging, not lucky imaging. With lucky imaging you keep only the percentage of frames with the best FWHM; with fast imaging you keep all the frames, even the blurry ones, to add to the SNR. So sharpness wasn't the goal here, SNR was. Where I live, M81 is right over the skyglow of Chicago, and every time I tried imaging this galaxy, the light pollution would ruin it. I had some success with my RASA 8", but the galaxy was so small you couldn't see any details. So if anything, taking so many short exposures really helped reject most of the light pollution! I may one day use SubframeSelector to go through all of the data and keep only the images with the best PSF Signal Weight. But for now I was actually able to image something I could not before at a longer focal length because of the light pollution. See the images below and you will see my rejections are mostly light-polluted shot noise :-) Yes, some had high clouds, but not this bad.

Have you actually considered culling soft frames to optimize your FWHM? Since that seemed to be one of your goals from the original topic, and given you have fifty thousand frames...that I am assuming were not very dithered if dithered at all...then your SNR could very well be limited by FPN long before stacking a full 50k frames.

You could probably keep half of them that have the best FWHM, integrate, and find your SNR is actually darn close to the full 50k stack, if you are indeed FPN limited. If that is the case, then you may well be able to improve your FWHM. You may need to cull even more than 50%, but if you did have pockets of better seeing, and you stack only the best subs, you should be able to realize improved resolution. Doubtful that it would be diffraction limited, but, better than stacking the whole lot. It doesn't take that many soft subs to soften up the whole image, and culling the worst will offer some improvement. Then keeping only the best (within some range to maintain a reasonable SNR) should improve details, to one degree or another.

|


What are the differences between Fast Integration and good ole' Integration?

|

What are the differences between Fast Integration and good ole' Integration?

It's an isomorphic transformation between subsequent pairs of images. Unlike the "good ole'" ImageIntegration, it doesn't allow for quality weighting, local normalization or global pixel rejection algorithms.

In other words, it is very fast and reaches (about) the same goals as the standard integration on a statistical basis when adding thousands of images. This is what is called Fast Imaging.

|

Jon Rista:

David Huff:

andrea tasselli:

So after all is said and done your integrated seeing is just 2.7". Which isn't bad at all but certainly not exceptional which would prompt the other question: was it worth it when you can get that sort of result with just "normal" long integrations?

This is fast imaging, not lucky imaging. With lucky imaging you keep only the percentage of frames with the best FWHM; with fast imaging you keep all the frames, even the blurry ones, to add to the SNR. So sharpness wasn't the goal here, SNR was. Where I live, M81 is right over the skyglow of Chicago, and every time I tried imaging this galaxy, the light pollution would ruin it. I had some success with my RASA 8", but the galaxy was so small you couldn't see any details. So if anything, taking so many short exposures really helped reject most of the light pollution! I may one day use SubframeSelector to go through all of the data and keep only the images with the best PSF Signal Weight. But for now I was actually able to image something I could not before at a longer focal length because of the light pollution. See the images below and you will see my rejections are mostly light-polluted shot noise :-) Yes, some had high clouds, but not this bad.

Have you actually considered culling soft frames to optimize your FWHM? Since that seemed to be one of your goals from the original topic, and given you have fifty thousand frames...that I am assuming were not very dithered if dithered at all...then your SNR could very well be limited by FPN long before stacking a full 50k frames.

You could probably keep half of them that have the best FWHM, integrate, and find your SNR is actually darn close to the full 50k stack, if you are indeed FPN limited. If that is the case, then you may well be able to improve your FWHM. You may need to cull even more than 50%, but if you did have pockets of better seeing, and you stack only the best subs, you should be able to realize improved resolution. Doubtful that it would be diffraction limited, but, better than stacking the whole lot. It doesn't take that many soft subs to soften up the whole image, and culling the worst will offer some improvement. Then keeping only the best (within some range to maintain a reasonable SNR) should improve details, to one degree or another.

Hi Jon,

Yes, I am considering culling data. At the moment I am working on an M51 image. So far, running the debayered images through SubframeSelector and selecting on the measured PSFSignalWeight, I see a decrease in FWHM, and the stacked image looks even better. The other change is that I am now using the camera I wanted to use in the first place: an IMX533 camera with 3.76 µm pixels, which with the APEX-ED 0.65 reducer/corrector gives me a pixel scale of 0.74"/px. So far my FWHM is smaller than with my M81 image, so that is already an improvement. Also, I have only had to cull 25% so far to get this improvement. I did see that I really didn't get much SNR improvement after 30K frames, so I can cull a lot before I see any degradation. And of course I will report and update the first page with my findings. I am also working on a way to use PixInsight native scripts instead of Python to make this more usable for the community. Ironically, writing the Python script helped me figure out how to do the same with PixInsight's ImageFileManager script in combination with SubframeSelector.

I forgot to mention I always dither 20px but with this it was every 30th frame.

Thanks for your comment!

Dave
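The culling step Dave describes (rank subs by PSF Signal Weight, drop the worst 25%) might look roughly like the sketch below. This is not his script: the `Sub` record, the file names and the measurements are all hypothetical, and in practice the values would come from a SubframeSelector export.

```python
from typing import NamedTuple


class Sub(NamedTuple):
    path: str                 # hypothetical file name
    psf_signal_weight: float  # higher is better
    fwhm: float               # arcseconds


def keep_best(subs: list[Sub], keep_fraction: float) -> list[Sub]:
    """Return the top `keep_fraction` of subs ranked by PSF Signal Weight."""
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    ranked = sorted(subs, key=lambda s: s.psf_signal_weight, reverse=True)
    n_keep = max(1, round(len(ranked) * keep_fraction))
    return ranked[:n_keep]


def mean_fwhm(subs: list[Sub]) -> float:
    """Average FWHM of a set of subs, in arcseconds."""
    return sum(s.fwhm for s in subs) / len(subs)


if __name__ == "__main__":
    # Made-up measurements, purely for illustration.
    subs = [
        Sub("sub_0001.xisf", 0.82, 2.1),
        Sub("sub_0002.xisf", 0.40, 3.4),
        Sub("sub_0003.xisf", 0.75, 2.3),
        Sub("sub_0004.xisf", 0.91, 1.9),
    ]
    kept = keep_best(subs, keep_fraction=0.75)  # cull the worst 25%
    print([s.path for s in kept])
    print(f"mean FWHM: {mean_fwhm(subs):.2f} -> {mean_fwhm(kept):.2f}")
```

Ranking on PSF Signal Weight rather than FWHM alone is a choice taken from the post; swapping the sort key to `fwhm` (ascending) would give a pure sharpness cull instead.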

|

What are the differences between Fast Integration and good ole' Integration?

Specifically regarding the FastIntegration process in PI, it is highly optimized for integrating a huge number of subs as fast as possible. It requires calibrated frames, and will perform registration and stacking for you. It's more of a streaming integration tool, where it will progressively stack (as I understand it) one batch of subs after another, allowing images to be integrated from insane numbers of subs (hundreds of thousands or more).

I question the viability of it, really, outside of just smoothing out the brightest objects in a field, due to FPN. I doubt it would do much for SNR, beyond a certain point. To acquire such huge frame counts, you would have to be acquiring frames so fast that you couldn't dither. Any random movements and drift, would not only not be proper or sufficient dithering, but they are most likely going to lead to correlated noise in the stack. Even with calibration, there is usually some degree of remnant FPN. With stacking a couple hundred subs, this remnant wouldn't be an issue, but tens of thousands to hundreds of thousands of subs, it probably would be. Once you are FPN limited, additional stacking wouldn't be beneficial, but it can be really tough (and very tedious) to identify when you become FPN limited.
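To make the FPN argument concrete, here is a toy model, offered as a sketch under simple assumptions rather than a description of any real camera. It assumes no dithering at all, so the residual fixed-pattern term adds coherently from frame to frame while the random noise (shot plus read) averages down. All numbers are illustrative.

```python
import math


def stacked_snr(n_frames: int, signal: float, random_noise: float,
                fpn_residual: float) -> float:
    """SNR of an n_frames stack when residual FPN does not average down.

    signal, random_noise and fpn_residual are per-frame values in electrons.
    Random noise shrinks as 1/sqrt(N); the fixed-pattern term stays put.
    """
    variance = random_noise**2 / n_frames + fpn_residual**2
    return signal / math.sqrt(variance)


if __name__ == "__main__":
    # Illustrative numbers only, not measured from any sensor.
    signal, random_noise, fpn_residual = 1.0, 10.0, 0.1
    for n in (1_000, 10_000, 25_000, 50_000, 500_000):
        snr = stacked_snr(n, signal, random_noise, fpn_residual)
        print(f"{n:>7} frames: SNR = {snr:5.2f}")
    print(f"ceiling (signal / FPN) = {signal / fpn_residual:.1f}")
```

With these made-up values the 25k and 50k stacks end up within roughly 10% of each other, instead of the factor of sqrt(2) expected when only random noise is present. Real dithering breaks the coherence of the fixed pattern, so this model is the pessimistic end of the range.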

|

andrea tasselli:

What are the differences between Fast Integration and good ole' Integration?

It's an isomorphic transformation between subsequent pairs of images. Unlike the "good ole'" ImageIntegration, it doesn't allow for quality weighting, local normalization or global pixel rejection algorithms.

In other words, it is very fast and reaches (about) the same goals as the standard integration on a statistical basis when adding thousands of images. This is what is called Fast Imaging.

Yes, and I should add it is insanely fast when using defaults and maxing out the prefetch and integration buffers. The FastIntegration process does the star alignment, interpolation, rejection, and stacking for you, and even creates drizzle files if the option is checked. The only downside is that, because it doesn't have options for image weighting and local normalization, your data has to be really clean, with the exception of the occasional satellite or airplane.

So all you have to do is calibrate your data, debayer if needed, run FastIntegration, then drizzle if you want, and you're done! I was able to process 50,000+ images in about 7 hours. 1000 images takes minutes! But with this tool more is better.

|

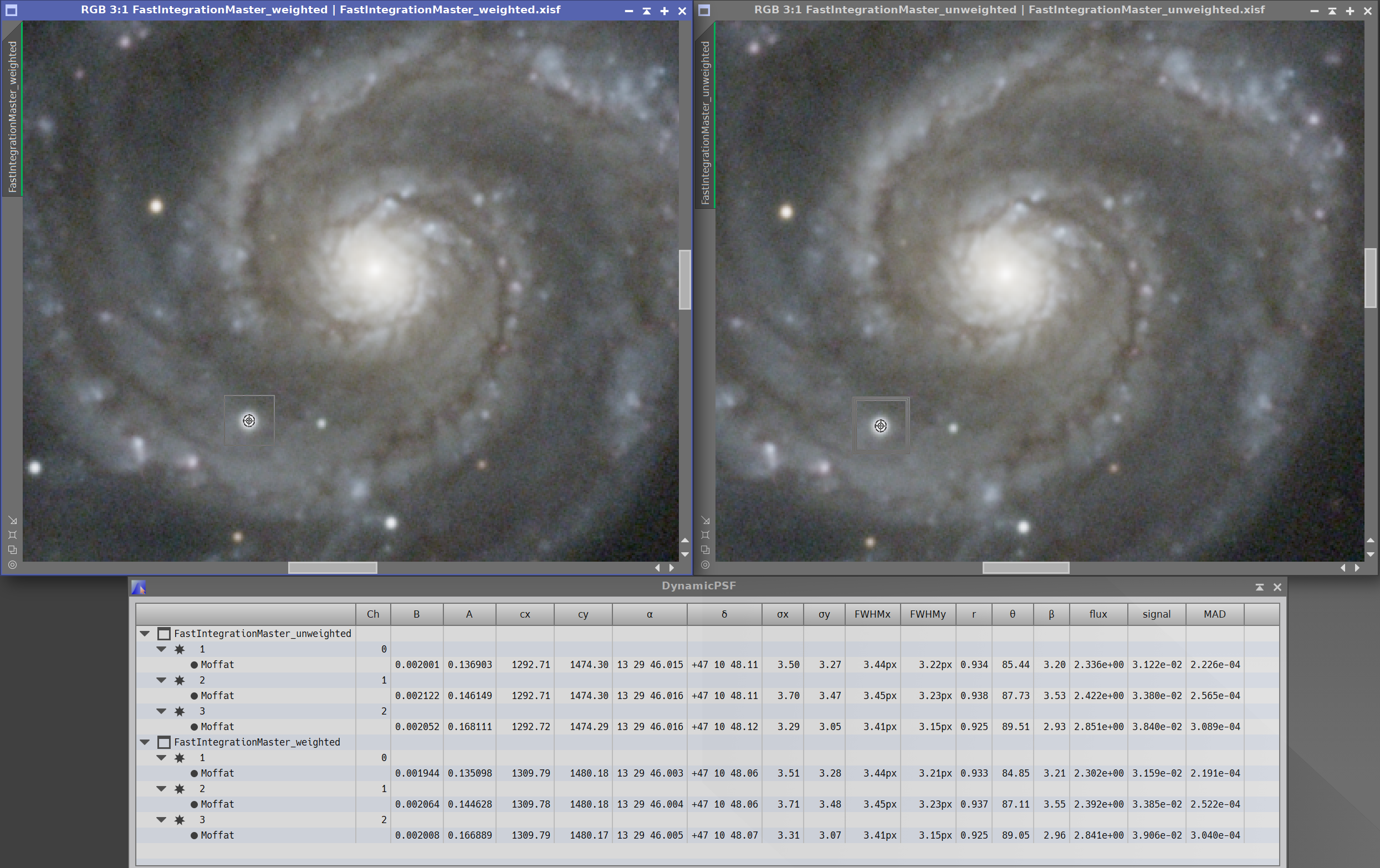

I just had a discussion in the PixInsight forum where I wanted to know if image quality could be increased by re-ordering the subframes by PSF Signal Weight, which also puts the subframes with the lowest FWHM at the beginning. I ran a test comparing a stack with the subframes sorted by PSF Signal Weight (the weighted image) against a stack in captured order (the unweighted image), and the difference is so subtle that I don't feel it is worth the effort.

As you can see in the screenshot, the difference between the captured sequence order and the order sorted by PSF Signal Weight is almost nil. There is an extremely subtle improvement in the image that has the subframes ordered by PSF Signal Weight, but not enough to warrant all the extra effort.

The images were only gradient corrected and color corrected, then the same screen stretch was applied to reduce the brightness so the details can be seen.

For those who are curious, the scope and camera combo produces an image scale of 0.74 arcseconds per pixel.

I updated the main post to reflect this finding. This makes it even more important to have quality subframes for the FastIntegration process.
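For anyone who wants to check the image scale quoted above, the usual small-angle formula is scale = 206.265 × pixel size (µm) / focal length (mm). The sketch below assumes the 200 mm f/8 scope mentioned elsewhere in this thread, the 0.65 reducer and the 3.76 µm pixels; the function name is just for illustration.

```python
def pixel_scale_arcsec(pixel_um: float, focal_length_mm: float) -> float:
    """Image scale in arcseconds per pixel (small-angle approximation)."""
    return 206.265 * pixel_um / focal_length_mm


if __name__ == "__main__":
    aperture_mm = 200.0   # assumed from the thread
    focal_ratio = 8.0     # assumed native f/8
    reducer = 0.65        # reducer/corrector factor
    pixel_um = 3.76       # IMX533 pixel size

    focal_length_mm = aperture_mm * focal_ratio * reducer  # about 1040 mm
    scale = pixel_scale_arcsec(pixel_um, focal_length_mm)
    print(f"native:     {scale:.3f} arcsec/px")
    print(f"2x drizzle: {scale / 2:.3f} arcsec/px")
```

Under those assumptions the result lands just under 0.75"/px, in line with the figure in the post.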

|

Hi All,

Here is my completed image of M51 using what I learned from the M81 project. This time I had the appropriate camera and was able to capture it using optimum sampling while still using the fast imaging technique.

https://www.astrobin.com/lmaor8/

|

David,

I really like all your efforts to figure out how to wring every last bit of sharpness out of your scope. However, I'm not so sure that all the trouble you are going to in taking so many short exposures is helping very much, and it's adding a lot of overhead to your processing steps. I just did a quick survey of other images of M51 taken with longer exposures using an 8" scope, and many of the better ones reveal pretty much the same (and sometimes slightly more) detail than your image. Here is just one example: https://www.astrobin.com/dnbvnw/0/ that integrated 180s exposures for a total time of nearly 12 hours. This isn't to say that I don't like your image--I do, but you might stop to consider just how much real benefit you are getting from using a huge stack of really short exposures. Whatever benefit I might see doesn't look like it's worth all of the added hassle of dealing with that much data.

John

|

John Hayes:

David,

I really like all your efforts to figure out how to wring every last bit of sharpness out of your scope. However, I'm not so sure that all the trouble you are going to in taking so many short exposures is helping very much, and it's adding a lot of overhead to your processing steps. I just did a quick survey of other images of M51 taken with longer exposures using an 8" scope, and many of the better ones reveal pretty much the same (and sometimes slightly more) detail than your image. Here is just one example: https://www.astrobin.com/dnbvnw/0/ that integrated 180s exposures for a total time of nearly 12 hours. This isn't to say that I don't like your image--I do, but you might stop to consider just how much real benefit you are getting from using a huge stack of really short exposures. Whatever benefit I might see doesn't look like it's worth all of the added hassle of dealing with that much data.

John

Hi John,

One of the main reasons why I take so many exposures is to make drizzling more effective. My first attempt didn't allow much drizzling because it was too oversampled: I was forced to use a camera with smaller pixels because the one I wanted to use malfunctioned. This time I finally got my replacement camera from the manufacturer, with bigger pixels, and combined with the reducer it was properly sampled for my seeing conditions. That also means this time I could do a 2x drizzle to both counteract the Bayer filter and recover some spatial resolution. I was also able to get the pixel scale down to where I wanted without dry patches in my drizzled image. Ideally I would like to use a camera with bigger pixels so I could get rid of the focal reducer, but I am working with what I have at the moment. The link you posted shows this person has a beefier mount and a bigger-pixel camera, and it's mono, so I would expect the image to look better. But I am working with what I have until my kids are done with college and I get some debts paid off.

I learned a lot from this experience though. Coming from using a RASA and Hyperstar for the last 2 years, I had to re-learn some aspects of sampling at a longer focal length. I finally got it in my head that F-ratio does not matter if you have the right aperture and pixel size matched to your seeing conditions. Being a computer geek, I have a pretty beefy computer with lots of horsepower and storage, so I have no problem processing thousands of images. And with the new FastIntegration process from PixInsight, it's a piece of cake now. And yes, I know I kinda went overboard with the first image LOL.

Thanks for your comment as I appreciate your advice. Sometimes the best way to learn something new is to experiment as regardless of the outcome a lesson will be learned. All this cost me was time but I felt it was time well spent :-)

Clear Skies!

Dave

|

I think the most important part of drizzle is good dithering, not the number of subs.

Deep sky 'lucky imaging' should be feasible for very large telescopes that can afford reducing the exposure time below 1 sec.

Would be interesting to see what a 1 meter telescope could achieve.

|

David Huff:

All this cost me was time but I felt it was time well spent :-)

Dave, I just want to say thanks for taking the time to experiment with a different approach and sharing your experience and data with all of us. I'm still reading and googling everything mentioned (which may take me years to fully understand) but I think this type of activity is an extremely valuable contribution. As with any endeavour, you might find the overhead isn't worth the added benefit in imaging. I do think it's critical to test those theories out though. I can guarantee there are loads of members with more technical insight here, but I really enjoyed watching this trial of yours unfold and learning from it.

I'm inspired to go spend a few more hours arguing with Fusion 360 on how to design a better EAF mount for a Takahashi Doublet.

|

John Hayes:

David,

This is a nice project but I am skeptical of your initial claim that this is a diffraction limited image. First, fast imaging does not remove the effects of atmospheric wavefront distortion. It can produce a diffraction limited image of the Airy pattern--IF the seeing is sufficiently good and the exposure is sufficiently short. Second, the isokinetic patch is typically pretty small and with tilt typically being the largest component in atmospheric wavefront distortion, it will vary over the field. When you stack a lot of images, the tilt won't be constant across the field and the increase in image sharpness gained from short exposures will be seriously diluted (relative to the diffraction limit.) The diameter of the Airy disk for your 200 mm f/8 system is about 1.4" but the FWHM will be 0.58". Did you measure the FWHM of the stars in your image to confirm that they are about 0.6" FWHM?

You have produced a nice, sharp image but is it really diffraction limited?

John

At first I was like, that is a very interesting topic... then I read your comments and my brain exploded.

I should not come on astrobin without caffeine in my body.

Karl

*Edit: Finally this topic would be interesting if I could understand it lol. You guys are nuclear rocket scientists.
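For readers trying to follow the diffraction numbers John quotes, they can be reproduced with two textbook relations: the Airy disk diameter out to the first dark ring is 2 × 1.22 λ/D, and the FWHM of the central peak is about 1.03 λ/D. The sketch below assumes a wavelength of 550 nm (green light), which is not stated in the post.

```python
import math

ARCSEC_PER_RAD = 180.0 / math.pi * 3600.0


def airy_disk_diameter_arcsec(aperture_m: float, wavelength_m: float = 550e-9) -> float:
    """Angular diameter of the Airy disk, measured to the first dark ring."""
    return 2 * 1.22 * wavelength_m / aperture_m * ARCSEC_PER_RAD


def airy_fwhm_arcsec(aperture_m: float, wavelength_m: float = 550e-9) -> float:
    """FWHM of the Airy pattern's central peak, about 1.03 * lambda / D."""
    return 1.028 * wavelength_m / aperture_m * ARCSEC_PER_RAD


if __name__ == "__main__":
    aperture_m = 0.200  # the 200 mm aperture from John's post
    print(f"Airy disk diameter: {airy_disk_diameter_arcsec(aperture_m):.2f} arcsec")
    print(f"Airy FWHM:          {airy_fwhm_arcsec(aperture_m):.2f} arcsec")
```

Both values depend only on aperture and wavelength, not on focal ratio, which is why the f/8 figure does not appear in the calculation.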

|

AdrianC.:

I think the most important part of drizzle is good dithering, not the number of subs.

Deep sky 'lucky imaging' should be feasible for very large telescopes that can afford reducing the exposure time below 1 sec.

Would be interesting to see what a 1 meter telescope could achieve.

Yes, dithering is very important, which is why I dither every frame! Also, when you increase the scale and reduce the drop shrink, the more subs the better! There is an experiment using a camera called LuckyCam, an EMCCD camera, that does exactly what you wondered about. As you can see in this article, there is a reduction in FWHM with faster frame rates, but the EMCCD camera is more efficient at capturing these faint photons. This was the inspiration for my test, but the sheer number of frames is insane.

https://www.aanda.org/articles/aa/full/2006/05/aa3695-05/aa3695-05.right.html

|

Nick Grundy:

David Huff:

All this cost me was time but I felt it was time well spent :-)

Dave, I just want to say thanks for taking the time to experiment with a different approach and sharing your experience and data with all of us. I'm still reading and googling everything mentioned (which may take me years to fully understand) but I think this type of activity is an extremely valuable contribution. As with any endeavour, you might find the overhead isn't worth the added benefit in imaging. I do think it's critical to test those theories out though. I can guarantee there are loads of members with more technical insight here, but I really enjoyed watching this trial of yours unfold and learning from it.

I'm inspired to go spend a few more hours arguing with Fusion 360 on how to design a better EAF mount for a Takahashi Doublet.

Thanks Nick. Yes, nothing groundbreaking here yet, as I am still learning, but it was actually fun doing this to see what happens. I enjoy testing new ways to push lower-end but high-quality equipment to its limits, to see what the amateur astrophotographer and possibly citizen scientist can do with off-the-shelf equipment. I came to astronomy with an engineering mindset and this is what happens LOL.

CS, Dave

|

David Huff:

Yes, dithering is very important, which is why I dither every frame! Also, when you increase the scale and reduce the drop shrink, the more subs the better! There is an experiment using a camera called LuckyCam, an EMCCD camera, that does exactly what you wondered about. As you can see in this article, there is a reduction in FWHM with faster frame rates, but the EMCCD camera is more efficient at capturing these faint photons. This was the inspiration for my test, but the sheer number of frames is insane.

https://www.aanda.org/articles/aa/full/2006/05/aa3695-05/aa3695-05.right.html

Except that isn't really applicable to your case.

|

David Huff:

AdrianC.:

I think the most important part of drizzle is good dithering, not the number of subs.

Deep sky 'lucky imaging' should be feasible for very large telescopes that can afford reducing the exposure time below 1 sec.

Would be interesting to see what a 1 meter telescope could achieve.

Yes, dithering is very important, which is why I dither every frame! Also, when you increase the scale and reduce the drop shrink, the more subs the better! There is an experiment using a camera called LuckyCam, an EMCCD camera, that does exactly what you wondered about. As you can see in this article, there is a reduction in FWHM with faster frame rates, but the EMCCD camera is more efficient at capturing these faint photons. This was the inspiration for my test, but the sheer number of frames is insane.

https://www.aanda.org/articles/aa/full/2006/05/aa3695-05/aa3695-05.right.html

Thanks for the article link. As suspected, the method works with big-aperture scopes. Unfortunately such instruments are beyond our hobby.

|

I have a question way less scientific for you guys.

I always assumed that taking longer exposures was better for getting some dynamic range. I also assumed that using different exposure lengths increased the dynamic range too, a bit like normal HDR photography.

From what I see and the little I understand from this topic, it doesn't matter that much, and a 1200 sec exposure is somewhat equal to 1200x 1 sec exposures?

So let's say at my location I get my best image if I limit my exposure to 1 min. It would be beneficial for me to take a bunch of 1 min images with better overall subframe quality rather than fewer 5 min frames, of which I end up throwing away 50% because of bad eccentricity or something else?

Also, when using fast integration, I should only keep the best frames because there is no evaluation of the frames carried out during processing?

Thanks

Karl

|

Karl Theberge:

I have a question way less scientific for you guys.

I always assumed that taking longer exposures was better for getting some dynamic range. I also assumed that using different exposure lengths increased the dynamic range too, a bit like normal HDR photography.

From what I see and the little I understand from this topic, it doesn't matter that much, and a 1200 sec exposure is somewhat equal to 1200x 1 sec exposures?

So let's say at my location I get my best image if I limit my exposure to 1 min. It would be beneficial for me to take a bunch of 1 min images with better overall subframe quality rather than fewer 5 min frames, of which I end up throwing away 50% because of bad eccentricity or something else?

Also, when using fast integration, I should only keep the best frames because there is no evaluation of the frames carried out during processing?

Thanks

Karl

One of the benefits of short exposures is less dependency on guiding. Let's say your guide scope broke: the only way to make sure you didn't get shaky images would be to limit your exposures. With the FastIntegration process, as long as the bad frames don't introduce alignment errors, they are added to increase SNR. As long as the reference frame has a good PSF, low eccentricity, low background brightness and a low FWHM, the resulting image should still be good even with the bad frames. From what I understand, photons are collected regardless of the time frame, so it is the sum total of all of the frames that matters, aka total integration time. Longer exposures do let the sensor use more of its full well, which allows fainter signal to be captured. This is why some shoot a lot of shorter luminance frames and fewer, longer color frames. Another consideration is sky brightness, which can saturate pixels if you expose too long, so it is always good to take test captures and analyze the histogram to make sure data is not being clipped. I hope that makes sense, as I am not an expert but learned this from experience.

CS,

Dave

|

Karl Theberge:

I have a question way less scientific for you guys.

I always assumed that taking longer exposures was better for getting some dynamic range. I also assumed that using different exposure lengths increased the dynamic range too, a bit like normal HDR photography.

From what I see and the little I understand from this topic, it doesn't matter that much, and a 1200 sec exposure is somewhat equal to 1200x 1 sec exposures?

So let's say at my location I get my best image if I limit my exposure to 1 min. It would be beneficial for me to take a bunch of 1 min images with better overall subframe quality rather than fewer 5 min frames, of which I end up throwing away 50% because of bad eccentricity or something else?

Also, when using fast integration, I should only keep the best frames because there is no evaluation of the frames carried out during processing?

Thanks

Karl

*Unfortunately no, 1200x1 sec does not equal 1x1200 sec.

The exposure time per sub and total can be computed given a whole range of parameters.

If you Google CCD exposure time equation you will find examples. I wrote my own Python scripts, but in general for amateur work you can do close approximations. Why? Because amateurs don't use photometric filters.

Watch R. Glover's presentation on YouTube regarding exposure time per sub.
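As a rough illustration of why the split matters, here is a minimal sketch of the standard per-pixel CCD signal-to-noise equation applied to a stack. It is not AdrianC.'s script: the function name and all rates are made up for the example, and effects such as FPN, quantization and saturation are ignored.

```python
import math


def stack_snr(n_subs: int, t_sub: float, obj_rate: float, sky_rate: float,
              read_noise: float, dark_rate: float = 0.0) -> float:
    """SNR per pixel for a stack of n_subs exposures of t_sub seconds each.

    Rates are in e-/s/pixel; read_noise is in e- RMS per read.
    Shot noise scales with total time, read noise with the number of reads.
    """
    total_time = n_subs * t_sub
    signal = obj_rate * total_time
    variance = (obj_rate + sky_rate + dark_rate) * total_time + n_subs * read_noise**2
    return signal / math.sqrt(variance)


if __name__ == "__main__":
    # Illustrative values only: faint target, moderately bright sky.
    obj_rate, sky_rate, read_noise = 0.5, 2.0, 1.5
    for n_subs, t_sub in ((1200, 1.0), (300, 4.0), (20, 60.0), (1, 1200.0)):
        snr = stack_snr(n_subs, t_sub, obj_rate, sky_rate, read_noise)
        print(f"{n_subs:>4} x {t_sub:>6.0f} s: SNR = {snr:.2f}")
```

The only term that changes between the cases is `n_subs * read_noise**2`, so the penalty for short subs shrinks as the sky gets brighter or the read noise gets lower.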

|

AdrianC.:

Karl Theberge:

I have a question way less scientific for you guys.

I always assumed that taking longer exposures was better for getting some dynamic range. I also assumed that using different exposure lengths increased the dynamic range too, a bit like normal HDR photography.

From what I see and the little I understand from this topic, it doesn't matter that much, and a 1200 sec exposure is somewhat equal to 1200x 1 sec exposures?

So let's say at my location I get my best image if I limit my exposure to 1 min. It would be beneficial for me to take a bunch of 1 min images with better overall subframe quality rather than fewer 5 min frames, of which I end up throwing away 50% because of bad eccentricity or something else?

Also, when using fast integration, I should only keep the best frames because there is no evaluation of the frames carried out during processing?

Thanks

Karl

*Unfortunately no, 1200x1 sec does not equal 1x1200 sec.

The exposure time per sub and total can be computed given a whole range of parameters.

If you Google CCD exposure time equation you will find examples. I wrote my own Python scripts, but in general for amateur work you can do close approximations. Why? Because amateurs don't use photometric filters.

Watch R. Glover's presentation on YouTube regarding exposure time per sub.

SharpCap Smart Histogram is a good tool to use to find the optimum exposure time. For my last image, the program showed that 3.4 seconds would allow 5% noise and 60 seconds 1% noise with my sky brightness and camera profile. I chose 4 seconds, as it let me capture shorter subs without introducing more noise. Yes, I could have taken 60 second subs, but for this "experiment" 4 seconds was optimal, and anything less would have required more subs. Again, this was an experiment and I am not trying to prove anything. Just testing a hypothesis and sharing my results.

CS,

Dave

|

AdrianC.:

Karl Theberge:

I have a question way less scientific for you guys.

I always assumed that taking longer exposures was better for getting some dynamic range. I also assumed that using different exposure lengths increased the dynamic range too, a bit like normal HDR photography.

From what I see and the little I understand from this topic, it doesn't matter that much, and a 1200 sec exposure is somewhat equal to 1200x 1 sec exposures?

So let's say at my location I get my best image if I limit my exposure to 1 min. It would be beneficial for me to take a bunch of 1 min images with better overall subframe quality rather than fewer 5 min frames, of which I end up throwing away 50% because of bad eccentricity or something else?

Also, when using fast integration, I should only keep the best frames because there is no evaluation of the frames carried out during processing?

Thanks

Karl

*Unfortunately no, 1200x1 sec does not equal 1x1200 sec.

The exposure time per sub and total can be computed given a whole range of parameters.

If you Google "CCD exposure time equation" you will find examples. I wrote my own Python scripts, but in general for amateur work you can do close approximations. Why? Because amateurs don't use photometric filters.

Watch R. Glover's presentation on YouTube regarding exposure time per sub.

*Actually, it is pretty straightforward:
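The formula posted at this point is not reproduced in the text. A common way to write the SNR of a stack of N equal subs, offered here as an assumption rather than the exact expression that was posted, uses S for the target rate, B for the sky rate and D for the dark current (all in e-/s/pixel), RON for the read noise per sub, t for the sub length and T = N t for the total integration time:

```latex
\mathrm{SNR}
  = \frac{S\,t\,N}{\sqrt{N\left(S\,t + B\,t + D\,t + \mathrm{RON}^{2}\right)}}
  = \frac{S\,\sqrt{T}}{\sqrt{S + B + D + \mathrm{RON}^{2}/t}},
\qquad T = N\,t
```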

This shows that the difference lies in the sigma-squared term, i.e., RON^2/t, and that the SNR is proportional to the square root of the total integration time, which is independent of the number of integrations.
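To put numbers on that relationship, here is a small Python sketch comparing 1 x 1200 s against 1200 x 1 s. The noise model is the usual shot noise plus read noise per sub. The function name and all the rates are hypothetical, chosen only to make the read-noise term visible.

```python
import math


def stack_snr(signal_rate, sky_rate, read_noise, sub_exposure, n_subs, dark_rate=0.0):
    """SNR per pixel for a stack of n_subs equal exposures.

    Rates are in e-/s/pixel, read_noise is in e- RMS per sub.
    """
    total_time = sub_exposure * n_subs
    signal = signal_rate * total_time
    # Shot-noise variance scales with total time only;
    # read-noise variance is paid once per sub.
    variance = (signal_rate + sky_rate + dark_rate) * total_time + n_subs * read_noise ** 2
    return signal / math.sqrt(variance)


# Hypothetical values for illustration only.
target, sky, ron = 0.5, 5.0, 1.5

one_long = stack_snr(target, sky, ron, sub_exposure=1200, n_subs=1)
many_short = stack_snr(target, sky, ron, sub_exposure=1, n_subs=1200)

print(f"1 x 1200 s : SNR = {one_long:.2f}")
print(f"1200 x 1 s : SNR = {many_short:.2f}")
```

With the read noise set to zero the two cases come out identical, which is the "proportional to the square root of the total time" part. The gap between them shrinks as the sky gets brighter or the read noise gets lower.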

andrea tasselli:

AdrianC.:

Karl Theberge:

I have a question way less scientific for you guys.

I always assumed that taking longer exposures was better for dynamic range. I also assumed that using different exposure lengths increased the dynamic range, a bit like normal HDR photography.

From what I see, and the little I understand from this topic, it doesn't matter that much, and a 1200 sec exposure is roughly equal to 1200 x 1 sec exposures?

So let's say that at my location I get my best images if I limit my exposure to 1 min. It would then be beneficial for me to take a bunch of 1 min images with better overall subframe quality rather than fewer 5 min frames, of which I end up throwing away 50% because of bad eccentricity or something else.

Also, when using fast integration, should I keep only the best frames because no evaluation of the frames is carried out during processing?

Thanks

Karl

*Unfortunately no, 1200x1 sec does not equal 1x1200 sec.

The exposure time per sub and total can be computed given a whole range of parameters.

If you Google "CCD exposure time equation" you will find examples. I wrote my own Python scripts, but in general for amateur work you can do close approximations. Why? Because amateurs don't use photometric filters.

Watch R. Glover's presentation on YouTube regarding exposure time per sub.

*Actually, it is pretty straightforward:

This shows that the difference lies in the sigma-squared term, i.e., RON^2/t, and that the SNR is proportional to the square root of the total integration time, which is independent of the number of integrations.

On close inspection, yes, but if you want to actually compute it, it's not so easy... What is your sky background magnitude in the different Astrodon filters? Did you measure it? If so, how did you calibrate the magnitude (what is the zero point in the image), since professionals don't use Astrodon filters but Sloan, BVRI, etc.?

Get my point?
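To make the calibration question concrete, here is a hedged Python sketch of how a zero point ties measured counts to a magnitude. The function names and numbers are hypothetical. The catch raised above is that the catalogue magnitude of the reference star is only valid in its own photometric band, so a zero point derived through a non-photometric filter is an approximation at best.

```python
import math


def zero_point_from_star(catalog_mag, star_adu, exposure_s):
    """Zero point from one reference star: ZP = m_cat + 2.5*log10(count rate)."""
    return catalog_mag + 2.5 * math.log10(star_adu / exposure_s)


def sky_surface_brightness(sky_adu_per_pixel, exposure_s, pixel_scale_arcsec, zero_point):
    """Sky brightness in mag/arcsec^2 from the background level per pixel."""
    rate_per_arcsec2 = sky_adu_per_pixel / exposure_s / pixel_scale_arcsec ** 2
    return zero_point - 2.5 * math.log10(rate_per_arcsec2)


# Hypothetical measurements (offset already subtracted), for illustration only.
zp = zero_point_from_star(catalog_mag=10.0, star_adu=100000.0, exposure_s=10.0)
sky_mag = sky_surface_brightness(sky_adu_per_pixel=100.0, exposure_s=10.0,
                                 pixel_scale_arcsec=1.0, zero_point=zp)
print(f"zero point = {zp:.2f}, sky = {sky_mag:.2f} mag/arcsec^2")
```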

AdrianC.:

On close inspection, yes, but if you want to actually compute it, it's not so easy... What is your sky background magnitude in the different Astrodon filters? Did you measure it? If so, how did you calibrate the magnitude (what is the zero point in the image), since professionals don't use Astrodon filters but Sloan, BVRI, etc.?

Get my point?

Not quite. I'm only in the business of establishing relationships, not actually calculating a specific SNR. Why Astrodon, btw?

This is why I just paid the $20 for SharpCap Pro: it does a pretty accurate sensor analysis. Then, right before I image a subject, I run the Smart Histogram, which measures the sky background using the camera and filter I choose. It then applies the camera's e/ADU and gain to the same formula you have shown and displays the optimum exposure, gain, and offset to maximize the exposure time, with options for how much added noise you want to allow. I used to use the script in PixInsight and it wasn't very accurate. Dr. Robin Glover made an easy way to calculate the proper exposures without all the guesswork. This is also very useful for narrowband filters, and especially for photometric ones (Sloan/SDSS or UBVRI), as it takes your camera's quantum efficiency at that wavelength into account. It worked great for my IMX585 camera with the Sloan z-s filter, as near infrared can be tricky.
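As a rough sketch of the conversion step described above, here is how a measured sky background in ADU can be turned into electrons per second per pixel, assuming the offset and e/ADU come from a sensor analysis at the same gain and bit depth. The function name and the numbers are hypothetical, and this is not SharpCap's implementation.

```python
def sky_electron_rate(mean_sky_adu, offset_adu, gain_e_per_adu, exposure_s):
    """Sky background rate in e-/s/pixel from a test frame.

    mean_sky_adu   : mean background level of the frame (ADU)
    offset_adu     : camera offset / bias level (ADU, same bit depth)
    gain_e_per_adu : conversion gain from a sensor analysis (e-/ADU)
    exposure_s     : exposure length of the test frame (s)
    """
    if exposure_s <= 0:
        raise ValueError("exposure must be positive")
    return (mean_sky_adu - offset_adu) * gain_e_per_adu / exposure_s


# Hypothetical test frame, for illustration only.
rate = sky_electron_rate(mean_sky_adu=900.0, offset_adu=500.0,
                         gain_e_per_adu=0.25, exposure_s=4.0)
print(f"sky rate = {rate:.1f} e-/s/pixel")
```

The resulting rate is the sky term that goes into the exposure formula along with the read noise at the chosen gain.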

CS!

Dave
